Recently I decided to switch my main computer off every day. It used to be on all the time and consumed a lot of electricity. So I moved the common tasks to a really small computer: SSH server, Wake-on-LAN (for my main computer), VPN access and mail relay. This new computer consumes 7 watts, but its specs are: Geode CPU at 300 MHz, 128 MB of RAM, and a 40 GB hard drive. Yes, that's really low, but more than enough for the tasks assigned to it. I log on to it from outside now and then to reach all the computers inside my home network.

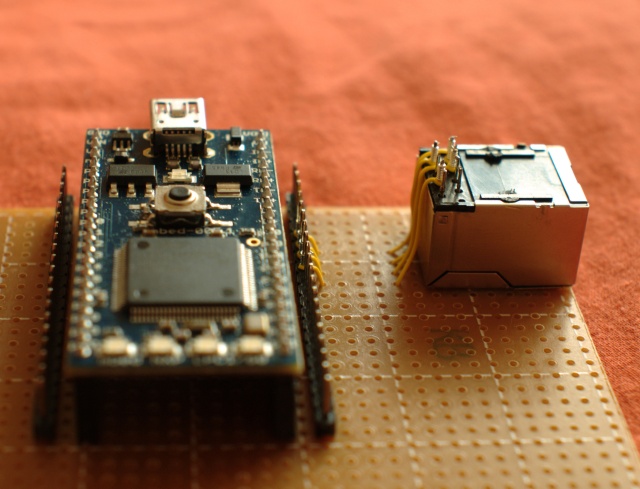

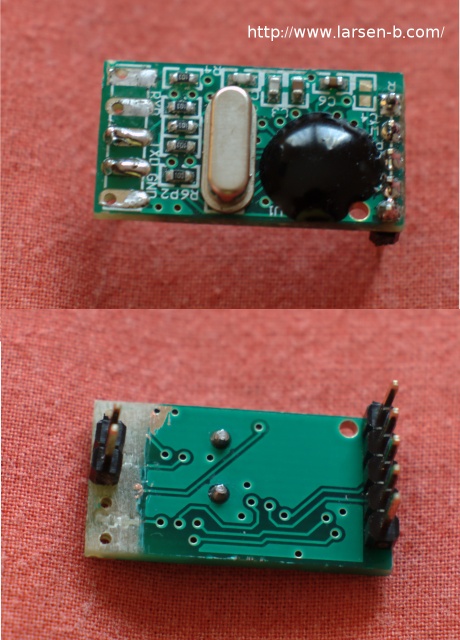

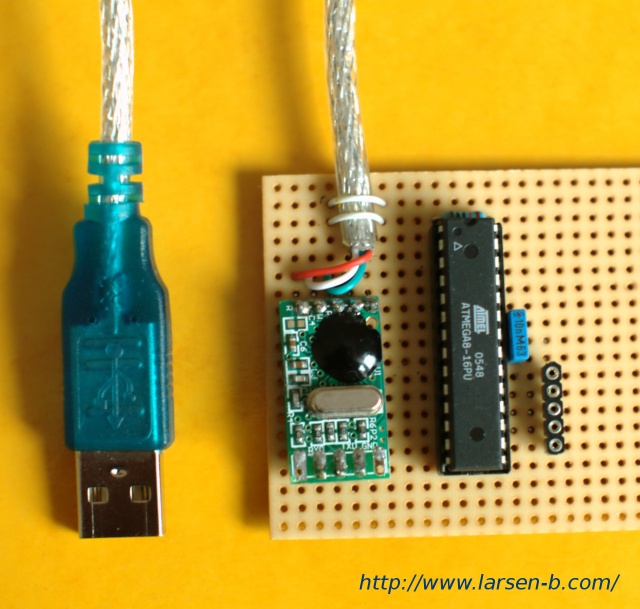

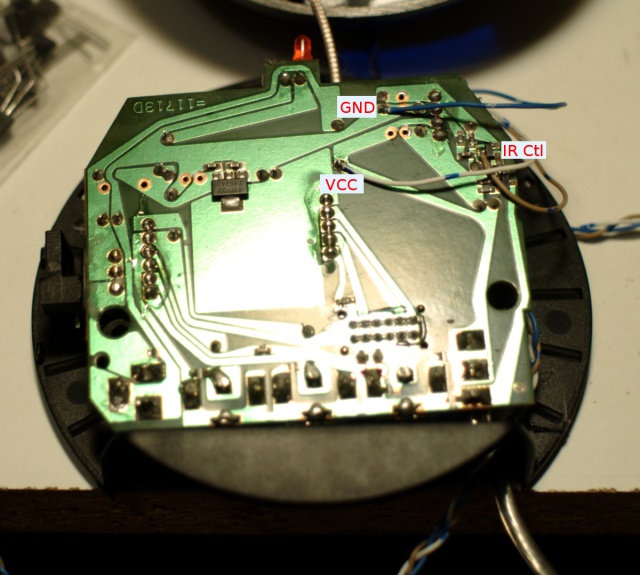

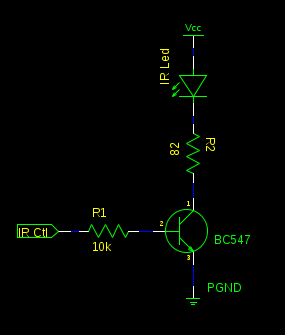

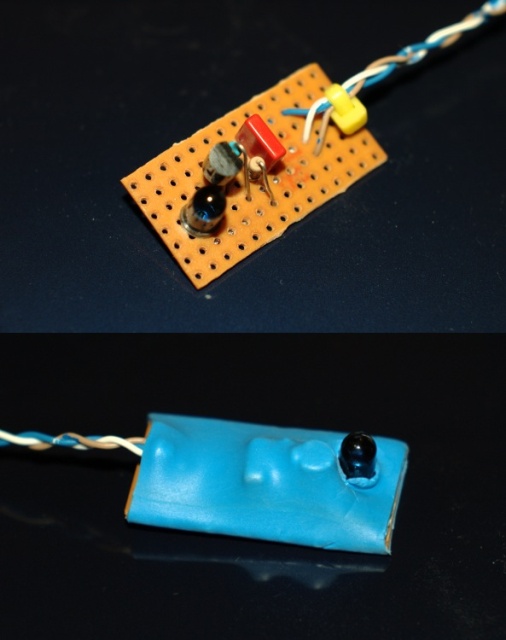

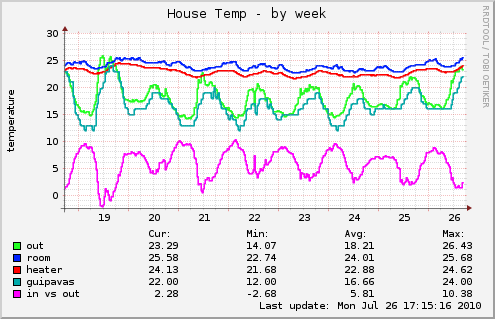

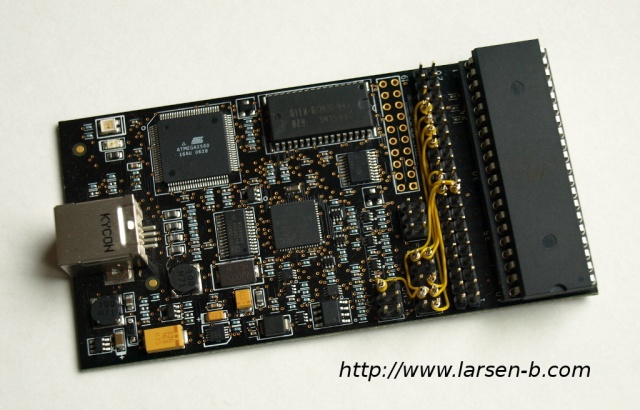

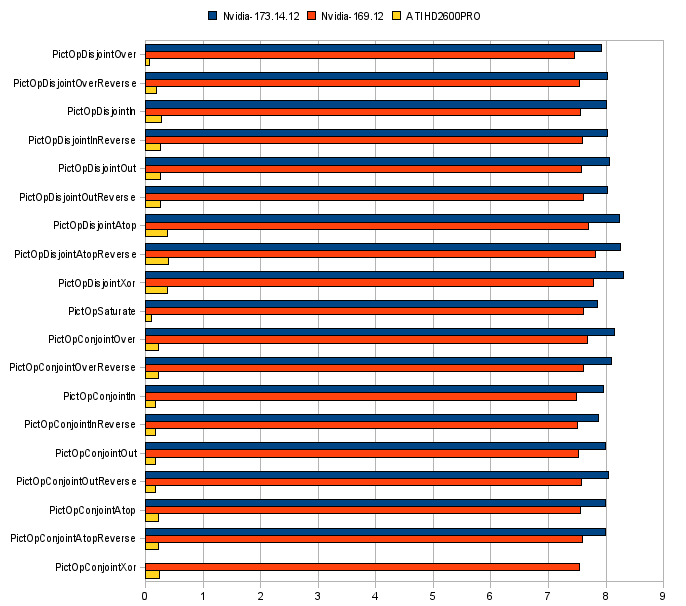

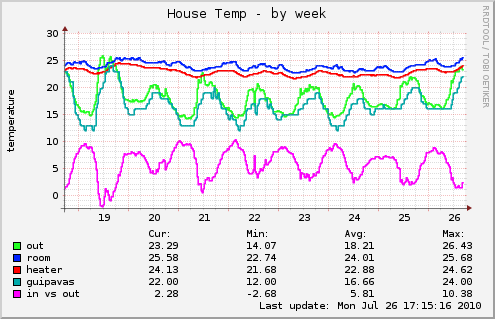

The main issue here is that I used my main computer to monitor a 1-Wire network of outdoor, heating and room temperatures. I used a small Arduino board and a couple of Python scripts to populate some munin graphs, like this one:

As you can see on this graph, I use a reference temperature from Guipavas. This data is public, and I get it from Weather.com. All of this has worked fine for about a year now. But when I switched to my new little box (300 MHz...), the Python script that monitors the 1-Wire network and fetches the weather.com reference turned out to be a bit heavier than expected for this little box.

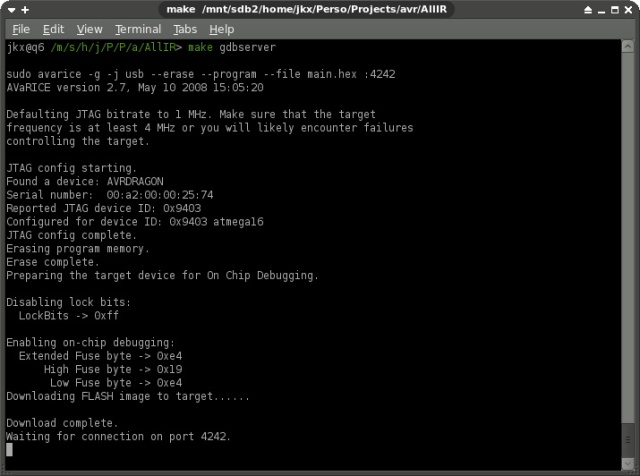

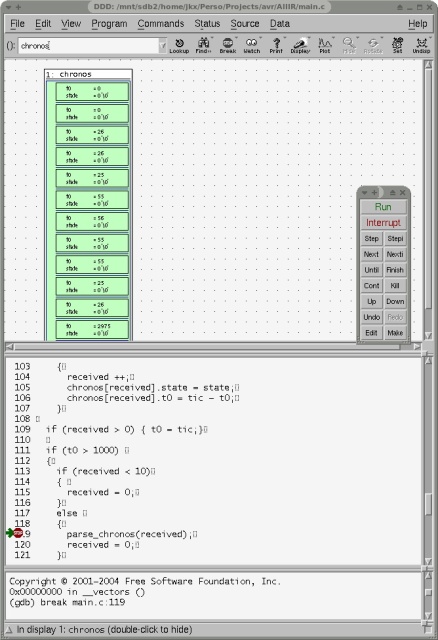

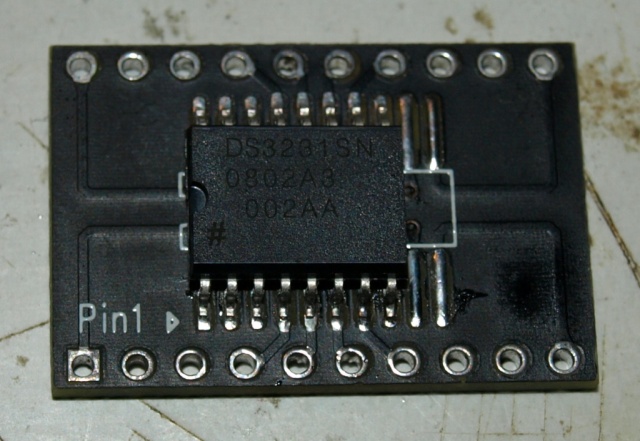
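For readers who have never fed munin from Python, here is a minimal sketch of what such a plugin can look like. It is not my actual script: the sensor names and values are made up and hard-coded, where a real plugin would read them from the Arduino. The only part taken from munin itself is the plugin convention: called with `config` the plugin describes the graph, called without arguments it prints one `<field>.value <number>` line per sensor.

```python
import sys

# Hypothetical sensor names and readings (assumption for illustration);
# a real plugin would get them from the Arduino instead.
READINGS = {"outside": 12.5, "heating": 41.0, "living_room": 19.8}


def config_lines(readings):
    """Lines printed when munin calls the plugin with 'config'."""
    lines = [
        "graph_title Temperatures",
        "graph_vlabel degrees C",
        "graph_category sensors",
    ]
    for name in sorted(readings):
        lines.append("%s.label %s" % (name, name))
    return lines


def value_lines(readings):
    """Lines printed on a normal run: one '<field>.value <n>' per sensor."""
    return ["%s.value %.1f" % (name, readings[name]) for name in sorted(readings)]


def main(argv):
    if len(argv) > 1 and argv[1] == "config":
        print("\n".join(config_lines(READINGS)))
    else:
        print("\n".join(value_lines(READINGS)))


if __name__ == "__main__":
    main(sys.argv)
```

Munin runs the plugin at every polling cycle, so each cycle pays for a fresh interpreter start plus all the imports.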

I first thought of rewriting this in pure C, but having to deal with XML parsing (libxml) and POSIX serial in C... Long story short, I decided to rewrite this script (and others) in Vala. I will not repeat the Vala introduction here, but in short it's a new language, used by the GNOME desktop, that compiles to C. The syntax is C#-like, it has a lot of libraries, and it doesn't need the bloat of an interpreter (or a VM). My first test was to listen to the Arduino serial port.

    public void run()
    {
        ser = new Serial.POSIX();
        loop = new GLib.MainLoop();
        ser.speed = Serial.Speed.B38400;
        ser.received_data.connect(parseSerial);
        loop.run();
    }

I used a Serial.vala wrapper found on the net; it is simple and neat. I just added some string parsing, and my Arduino 1-Wire network works with Vala. Next comes the Weather.com parsing, which will be covered in a future post.
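To give an idea of what the string parsing step involves, here is a minimal sketch in Python. The line format is an assumption made for the example (`SENSOR_ID:TEMPERATURE`, one reading per line), not what my Arduino actually sends, and `parse_reading` / `parse_stream` are illustrative names. The point is only that serial input arrives as text lines that may be truncated or corrupted, so the parser has to skip bad lines quietly.

```python
def parse_reading(line):
    """Parse one 'SENSOR_ID:TEMPERATURE' line (assumed format).

    Returns (sensor_id, temperature) or None if the line is unusable.
    """
    sensor_id, sep, raw = line.strip().partition(":")
    sensor_id = sensor_id.strip()
    if not sep or not sensor_id:
        return None
    try:
        return sensor_id, float(raw)
    except ValueError:
        return None  # truncated or corrupted line: ignore it


def parse_stream(lines):
    """Keep the most recent valid reading for each sensor."""
    latest = {}
    for line in lines:
        reading = parse_reading(line)
        if reading is not None:
            latest[reading[0]] = reading[1]
    return latest
```

In a real script the `lines` argument would come from the serial port (for example through pyserial) rather than from a list.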

Overall, the Vala result is fine. The resulting binary is small (38 KB), but it has quite a lot of dependencies (libsoup, glib, pthread, gobject...) and consumes more memory than my Python script. The Python interpreter + ElementTree (XML parsing) + pyserial eat around 8.9 MB of RAM, while my Vala code eats 12.3 MB. But keep in mind that this figure includes all the shared libraries. So if you run a couple of scripts like I do, this memory isn't a big deal, because those libraries are shared across the different processes without extra overhead.

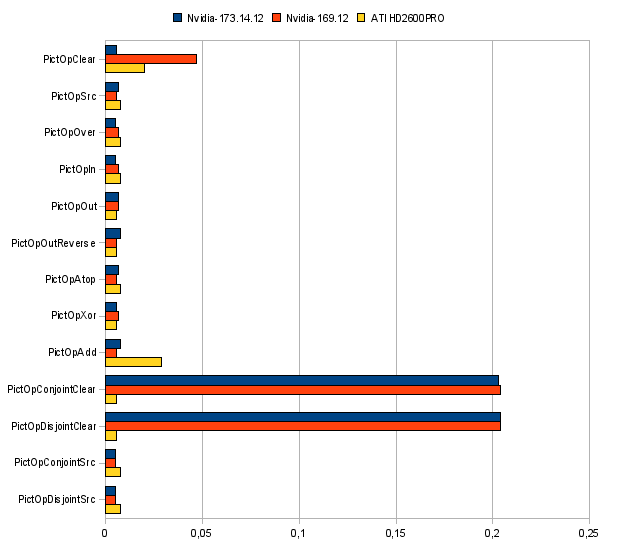

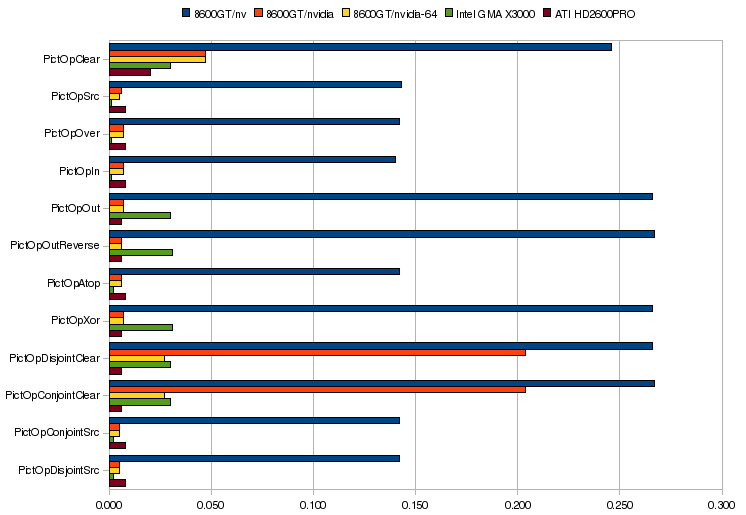

Meanwhile, the main difference between the two versions is speed. Here are some results from the time command, for the weather.com functions only (I dropped the serial I/O for this test):

    jkx@brick:~$ time python weather.py
    Temp: 20
    Pres: 1021.0 hPa
    Wind: 19 km/s

    real 0m2.105s
    user 0m1.468s
    sys 0m0.216s

    jkx@brick:~$ time ./weather
    Temp: 20 deg
    Pres: 1021.0 hPa
    Wind: 19 km/s

    real 0m0.427s
    user 0m0.084s
    sys 0m0.032s

OK, Python takes almost 5x the Vala time for the same job (2.1 s versus 0.43 s of real time). Of course the two pieces of code aren't exactly the same, and the test involves network access, but I ran it a couple of times and the result is always about the same. So I decided to look closer, and found that although the Python interpreter itself loads quickly, ElementTree + urllib2 take 1.35 s to import.
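If you want to check the import cost on your own box, a minimal sketch of the measurement looks like this. It targets Python 3, where `urllib2` has become `urllib.request`, so it is not the exact pair of modules I measured; `time_import` is just an illustrative helper name. Run it in a fresh interpreter, because a module that is already loaded imports almost instantly.

```python
import importlib
import sys
import time


def time_import(module_name):
    """Return the seconds spent importing module_name."""
    start = time.perf_counter()
    importlib.import_module(module_name)
    return time.perf_counter() - start


if __name__ == "__main__":
    for name in ("xml.etree.ElementTree", "urllib.request"):
        # A module already in sys.modules costs next to nothing to import.
        cached = name in sys.modules
        elapsed = time_import(name)
        note = " (already loaded)" if cached else ""
        print("%-24s %.3f s%s" % (name, elapsed, note))
```

On Python 3.7 and later, `python -X importtime script.py` gives a per-module breakdown without any extra code.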

I get it: this system has a really small CPU, and importing libraries from the hard drive takes time. That doesn't happen with my Vala code: the binary is small, and all its dependencies are already loaded by the OS itself. To conclude, Python is still my favorite language, but running Python scripts on a small system has an overhead that I have to take care of, and avoiding loading and unloading libraries is the key. A single Python process with several scripts loaded would be a better choice. And for small custom apps on this kind of system, Vala seems to be a good alternative.
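The "single Python process" idea can be sketched as follows. This is an illustration rather than something I run: the two jobs are hypothetical stand-ins for the sensor and weather scripts, the XML document is a made-up sample, and nothing touches the network or the serial port. What it shows is that the imports are paid once at startup, and every later round only runs the jobs.

```python
import time
import xml.etree.ElementTree as ET  # imported once, when the process starts


def read_sensors():
    """Hypothetical job standing in for the 1-Wire/serial script."""
    return "sensors read"


def fetch_weather():
    """Hypothetical job standing in for the weather script.

    Parses a made-up sample document instead of fetching real data.
    """
    root = ET.fromstring("<weather><temp>20</temp></weather>")
    return "temp=%s" % root.findtext("temp")


def run_round(jobs):
    """Run every job once; a failing job must not kill the process."""
    results = []
    for job in jobs:
        try:
            results.append(job())
        except Exception as exc:
            results.append("error: %s" % exc)
    return results


def main_loop(jobs, interval_seconds, rounds=None, sleep=time.sleep):
    """Run all jobs every interval_seconds; rounds=None means forever."""
    done = 0
    while rounds is None or done < rounds:
        for line in run_round(jobs):
            print(line)
        done += 1
        sleep(interval_seconds)
    return done
```

A call such as `main_loop([read_sensors, fetch_weather], 300)` would run both jobs every five minutes inside the same interpreter. The `rounds` and `sleep` parameters only exist so the loop can be tried out without waiting.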

// Enjoy the sun