Ah, but of course :) If only I had known..

I couldn’t resist:

I believe that with Zope and Plone you can build content management sites?

I’m thinking of getting into Zope precisely for its content management capabilities, but for the site I want to build I’ll need PHP and MySQL (for a Flash application…)

No tricks involved / source: LinuxFr / section: do we really have to answer every question?

With Christmas approaching, it’s time to stock the bar and make sure nothing is missing, you never know :)

Welcome to the VaxBar: http://www.lpl.arizona.edu/~vance/www/vaxbar.html

It had been a while since I last read Maïa, but I must admit that tonight.. while preparing the presents (yes, really, Chris got a head start..) I just had a look at her letter to Santa Claus.

I must admit it rings fairly true, and being on a fixed-term contract (CDD) myself, I wonder whether I shouldn’t steal her conclusion.

“So, Santa, don’t come showing off around me now. People like you don’t hand out any gifts.”

My friends bought me a lot of things for my birthday:

The Eye Toy is really a silly collection of games driven by the webcam. After an afternoon of jumping, kicking and boxing in front of the webcam, I decided to take a look at the webcam itself. After a little searching on the net, I discovered that this webcam is supported by Linux with the OV511 driver.

I just bought an HP SmartPhoto 735 camera (as a Christmas present for my sweetheart)

Pros:

Cons:

In conclusion:

So watch out for the price of the accessories that are not included. Personally, I returned it to the shop because on Christmas evening I went through 2 sets of batteries to take 25 photos and transfer them to the PC. The Sony DSC-P72, which costs 80 € more, comes with far more accessories, and above all its battery life is in a different league.

Even though the HP takes better photos in the end, I couldn’t contain my irritation with all these problems.

PS: Thanks to Julien at Darty Brest, who kindly agreed to exchange it (even though I had relied on his advice)

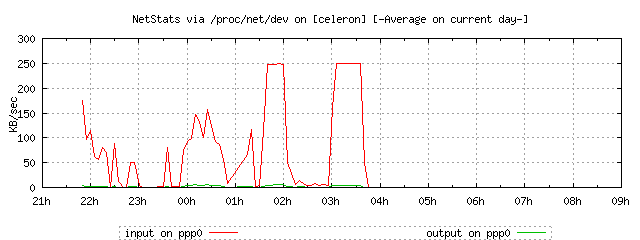

There, it’s done: I have 2Mb at home :)

OK, granted, it’s not much use. Still, downloading the FreesBie ISO in 45 min is kool all the same.

For the curious:

I downloaded the ISO from 3 to 4 at 250 KB/s, not bad, right? :)

– Enjoy http://www.cegetel.fr

Yeah, last week I spent a couple of days at Brest 2004; check out here for more info. We took a bunch of pictures (soon on this website, I hope :). It was a great event. I think this edition had fewer people than the previous one, so it was kool :)

Hard week: too much walking, too much junk food, not enough sleep.. That’s holidays!

I saw several concerts; here are my opinions:

Mr Miossec was, as usual, a little tipsy.. and the concert really nice. The only problem: there were far too many mobile phones and other airheads vainly trying to slum it with the sailors of the port. The upshot: the concert quickly turned into a harbour café, and that sucks.

As for them, they nearly got lynched for not singing L’apologie.. The covers were really nice, and the new songs really so-so. We’ll have to wait for the next album to get a real idea.

OK.. yes, the latest Placebo concert last Monday was great (really great). While thinking about this post, I decided to build a forum for the town I live in. You can find more info (in French) here

As you can see, this is a phpBB website since, as usual, I was unable to find a good Python product to handle this forum. Not really a big deal, but anyway.. I’m on holidays, and I don’t want to get into big technical stuff right now. It works, that’s it.